3D Interaction

Given my previous project on 3D perception, it was only natural for me to start looking into how different types of visual information support visually guided movement, specifically targeted reaching.

Although ubiquitous in daily life, visually guided reaching is complex and relies on both monocular and binocular information.

Monocular Reaching

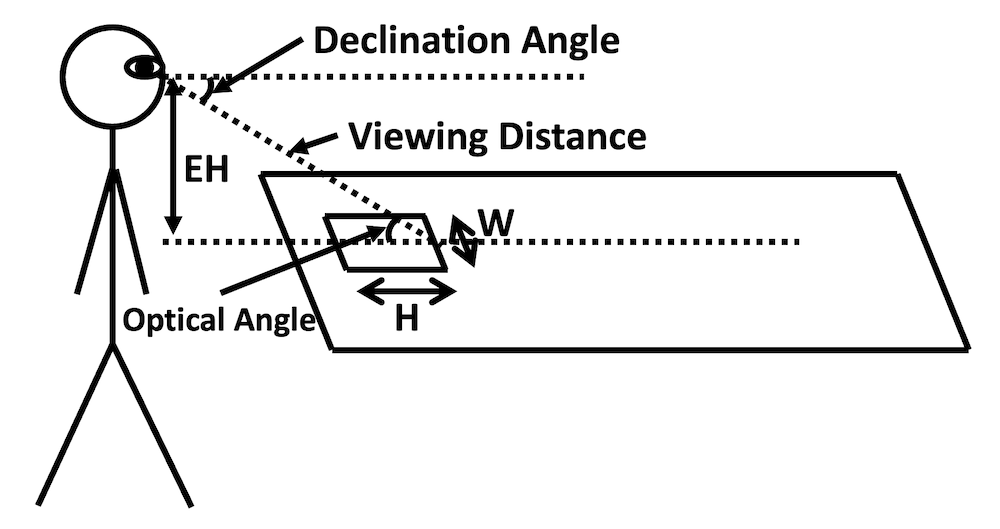

For monocular reaches, optical texture elements are the primary source of information specifying the relative distance between the hand and the target. In terms of perceptual geometry, two factors govern the different aspects of optical texture: viewing distance and optical angle. The image width of a texture element varies with the inverse of viewing distance, whereas its image height varies with both the inverse of viewing distance and the optical angle. Finally, one can derive the image shape, or pure foreshortening, as the ratio of image height to image width; because both scale with the inverse of viewing distance, this ratio depends on the optical angle alone.
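The geometry above can be made concrete with a small calculation. This is an illustrative sketch, not the model from the publications: it assumes a small square texture element of side `size`, measures image extents in radians under a small-angle approximation, and assumes image height scales with the sine of the optical angle (the angle between the line of sight and the surface). The helper `on_ground` is hypothetical and places the element on a horizontal support surface viewed from a given eye height.

```python
import math


def texture_image(size, distance, optical_angle):
    """Approximate image width, height, and shape of a small square texture element.

    size: physical side length of the element (m)
    distance: line-of-sight viewing distance (m)
    optical_angle: angle between the line of sight and the surface (radians)
    """
    width = size / distance                             # varies with 1 / distance
    height = size * math.sin(optical_angle) / distance  # varies with 1 / distance and the optical angle
    shape = height / width                              # pure foreshortening: distance cancels out
    return width, height, shape


def on_ground(size, eye_height, ground_distance):
    """Hypothetical helper: element lying on a horizontal surface below the eye."""
    distance = math.hypot(eye_height, ground_distance)  # line-of-sight distance
    optical_angle = math.asin(eye_height / distance)    # angle at which the line of sight meets the surface
    return texture_image(size, distance, optical_angle)


for d in (0.3, 0.5, 0.7):
    w, h, s = on_ground(size=0.01, eye_height=0.4, ground_distance=d)
    print(f"ground distance {d:.1f} m: width={w:.4f} rad, height={h:.4f} rad, shape={s:.3f}")
```

On a horizontal surface the optical angle itself shrinks with distance (its sine equals eye height divided by line-of-sight distance), so pure foreshortening still changes across the surface even though viewing distance cancels out of the ratio at any fixed optical angle.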

We examined which aspect of monocular texture elements observers rely on when performing visually guided reaches. Leveraging the random-dot displays created for the 3D perception project, I built a random-dot virtual environment in which participants used a joystick to control a virtual hand avatar and reach to a target surface.

We independently varied properties of the virtual environment to manipulate each aspect of the optical texture elements. Using computational modeling, we predicted reach distances under the assumption that participants relied on image width, image height, or image shape (pure foreshortening). Only pure foreshortening predicted the observed reach distances; image width and image height did not.
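To see how independent manipulations can separate these hypotheses, here is a hypothetical sketch, not the published model. It assumes an observer calibrated to a particular texture element size and eye height who inverts the texture geometry using a single optical variable, and it uses an illustrative manipulation (texture elements enlarged by 50%) that may differ from the manipulations used in the experiments. It also simplifies reach distance to the ground distance of the target rather than the hand-target relative distance. `EYE_HEIGHT`, `CALIBRATED_SIZE`, `image_of`, and `predicted_reach` are made-up names.

```python
import math

EYE_HEIGHT = 0.4        # hypothetical eye height above the support surface (m)
CALIBRATED_SIZE = 0.01  # hypothetical element size the observer is calibrated to (m)


def image_of(size, ground_distance, eye_height=EYE_HEIGHT):
    """Image width, height, and shape of a small element on a horizontal surface."""
    distance = math.hypot(eye_height, ground_distance)  # line-of-sight distance
    sin_angle = eye_height / distance                   # sine of the optical angle
    width = size / distance
    height = size * sin_angle / distance
    return width, height, height / width


def predicted_reach(width, height, shape, eye_height=EYE_HEIGHT, size=CALIBRATED_SIZE):
    """Ground distance inferred from each optical variable alone, assuming the
    calibrated element size and eye height (inverts image_of term by term)."""
    def to_ground(distance):
        return math.sqrt(max(distance**2 - eye_height**2, 0.0))

    from_width = size / width                             # width = size / D
    from_height = math.sqrt(size * eye_height / height)   # height = size * h / D**2
    from_shape = eye_height / shape                       # shape = h / D
    return {
        "width": to_ground(from_width),
        "height": to_ground(from_height),
        "shape": to_ground(from_shape),
    }


# Illustrative manipulation: texture elements 1.5x larger than the calibrated size.
target = 0.5
print(predicted_reach(*image_of(1.5 * CALIBRATED_SIZE, target)))
```

Under this manipulation the width-based and height-based observers undershoot the target, while the shape-based prediction is unaffected because element size cancels out of the ratio. Comparing predictions like these against observed reach distances is one way to tell the hypotheses apart.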

Related Publications

Herth, R.A., Wang, X.M., Cherry, O.C., & Bingham, G.P. (2021). Monocular guidance of reaches-to-grasp using visible support surface texture: Data and model. Experimental Brain Research, 239, 765-776. https://doi.org/10.1007/s00221-020-05989-3.

Bingham, G.P., Herth, R.A., Yang, P., Chen, Z., & Wang, X.M. (2022). Investigation of optical texture properties as relative distance information for monocular guidance of reaching. Vision Research, 196(4): 108029. https://doi.org/10.1016/j.visres.2022.108029.

Binocular Reaching

For binocular reaches, binocular disparity is the primary source of information for visual guidance. Here, the goal is to move the hand so that the relative disparity between the hand and the target is reduced to zero.
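As a rough illustration of this control goal, the sketch below computes the relative disparity of a hand and a target lying straight ahead on the midline, using the vergence angle each would require. The 6.4 cm interocular distance and the sign convention (positive when the hand is nearer than the target) are assumptions chosen for illustration, and the simplification ignores points off the midline.

```python
import math

INTEROCULAR = 0.064  # assumed interocular distance (m)


def vergence(distance, iod=INTEROCULAR):
    """Angle (radians) between the two lines of sight to a midline point."""
    return 2.0 * math.atan(iod / (2.0 * distance))


def relative_disparity(hand_distance, target_distance):
    """Vergence difference between hand and target (radians).
    Positive: hand nearer than target; zero: hand at the target's depth."""
    return vergence(hand_distance) - vergence(target_distance)


for hand in (0.30, 0.35, 0.40):
    arcmin = math.degrees(relative_disparity(hand, 0.40)) * 60
    print(f"hand at {hand:.2f} m, target at 0.40 m: {arcmin:.1f} arcmin")
```

Nulling this quantity brings the hand to the target's depth without the controller needing the absolute distance of either.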

In this study, we asked whether stable visually guided reaching requires an internal feedforward model to compensate for internal delays. Visually guided reaches typically take about 1 second, so delays in the feedback loop are non-negligible relative to the duration of the movement, and feedback control could become unstable. In the context of targeted reaching, feedback is visual information about the evolving relationship between the hand and the target, used to keep the hand reliably converging on the target. Instability is the failure to do so: the hand diverges significantly from the target, typically with growing oscillations, producing large errors. This instability arises from feedback delays caused by finite neural transmission times.
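A toy simulation shows how delay alone can turn convergence into growing oscillation. This is a generic first-order delayed feedback loop, dx/dt = -k * x(t - delay), not the model from the study; x stands for the hand-target gap, and the 100 ms delay and the gains are assumed values for illustration. For this linear system the gap converges when k * delay < π/2 and oscillates with growing amplitude when k * delay exceeds π/2.

```python
import math


def simulate_delayed_feedback(k, delay, duration=3.0, dt=0.001, x0=1.0):
    """Euler-integrate dx/dt = -k * x(t - delay), with x = x0 for all t <= 0.

    Returns the gap x sampled every dt from t = 0 onward.
    """
    lag = round(delay / dt)      # feedback delay expressed in integration steps
    steps = round(duration / dt)
    x = [x0] * (lag + 1)         # constant history before the movement starts
    for _ in range(steps):
        seen = x[-1 - lag]       # the controller acts on a gap that is `lag` steps old
        x.append(x[-1] - k * seen * dt)
    return x[lag:]


DELAY = 0.1  # assumed feedback delay (s)
for k in (5.0, 20.0):  # k * DELAY = 0.5 (below pi/2) and 2.0 (above pi/2)
    gap = simulate_delayed_feedback(k, DELAY)
    tail = max(abs(v) for v in gap[-500:])  # largest |gap| in the last 0.5 s
    print(f"k*delay = {k * DELAY:.1f} (threshold {math.pi / 2:.2f}): late max |gap| = {tail:.3g}")
```

The only difference between the two runs is the product of gain and delay, which is why a longer delay forces a controller of this form to act more gently.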

Using the same joystick-controlled virtual environment, we perturbed the mapping between joystick movement and the hand avatar on each trial to prevent participants from establishing an effective internal model for online control. Despite the perturbation, participants reached the target accurately with online visual feedback.
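The logic of the perturbation can be illustrated with a toy comparison built on our own assumptions rather than the study's: the joystick-to-avatar mapping is modeled as an unknown per-trial gain, a feedforward reach issues the command that would work if the gain were 1, and a feedback reach continuously steers by the delayed, visible hand-target gap. The gain range, the 100 ms delay, and the function names are hypothetical.

```python
import random

DT = 0.001     # integration step (s)
DELAY = 0.1    # assumed visual feedback delay (s)


def feedback_reach(gain, target, k=4.0, duration=2.0):
    """Command is proportional to the visible (delayed) hand-target gap; the
    unknown gain scales how the command moves the avatar. Returns final position."""
    lag = round(DELAY / DT)
    hand = [0.0] * (lag + 1)               # hand at rest at 0 before the reach
    for _ in range(round(duration / DT)):
        seen_gap = target - hand[-1 - lag]
        hand.append(hand[-1] + gain * k * seen_gap * DT)
    return hand[-1]


def feedforward_reach(gain, target, assumed_gain=1.0):
    """Pre-planned command that is correct only if the real gain equals assumed_gain."""
    planned_command = target / assumed_gain
    return gain * planned_command


random.seed(0)
target = 0.3  # m
for trial in range(3):
    g = random.uniform(0.5, 2.0)  # hypothetical per-trial perturbation of the mapping
    print(f"gain {g:.2f}: feedback error {feedback_reach(g, target) - target:+.4f} m, "
          f"feedforward error {feedforward_reach(g, target) - target:+.4f} m")
```

In this toy, the feedback reach lands on the target for every gain in the range without ever estimating the gain, while the feedforward reach errs in proportion to the gain mismatch. The feedback loop stays stable here only because gain * k * DELAY remains below π/2 across that range.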

To account for this ability, we simulated reaches using a proportional rate control model based on disparity tau (the relative disparity divided by its rate of change), which drives the relative binocular disparity between the hand and the target to zero by controlling the virtual equilibrium point of an equilibrium point (EP) model (i.e., the lambda model). The model successfully predicted the trajectories observed in the behavioral data.
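A minimal sketch of the proportional rate idea follows, under simplifying assumptions: it omits the EP (lambda) model and the feedback delay, and lets the closing rate of the relative disparity be set directly. Proportional rate control here means making the closing rate proportional to the current disparity, which holds disparity tau (taken as the time for the disparity to reach zero at its current rate) constant while the disparity decays toward zero. The setpoint `TAU_SET`, the starting disparity, and the helper names are hypothetical.

```python
DT = 0.001      # integration step (s)
TAU_SET = 0.2   # hypothetical tau setpoint (s)


def disparity_tau(previous, current, dt=DT):
    """Time for the disparity to reach zero at its current rate of change."""
    rate = (current - previous) / dt
    return -current / rate


def proportional_rate_reach(initial_disparity, duration=1.0, tau_set=TAU_SET):
    """Close the disparity at a rate proportional to its current value."""
    disparity = [initial_disparity]
    taus = []
    for _ in range(round(duration / DT)):
        closing_rate = disparity[-1] / tau_set   # rate proportional to remaining disparity
        disparity.append(disparity[-1] - closing_rate * DT)
        taus.append(disparity_tau(disparity[-2], disparity[-1]))
    return disparity, taus


disparity, taus = proportional_rate_reach(initial_disparity=20.0)  # arcmin, hypothetical
print(f"final disparity: {disparity[-1]:.3f} arcmin")
print(f"tau range: {min(taus):.4f} to {max(taus):.4f} s")
```

With Euler steps the estimated tau sits one step (DT) below the setpoint throughout, while the disparity itself shrinks exponentially toward zero.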

Related Publications

Bingham, G.P., Wang, X.M., & Herth, R.A. (2023). Stable visually guided reaching does not require an internal feedforward model to compensate internal delay: Data and model. Vision Research, 203: 108152. https://doi.org/10.1016/j.visres.2022.108152.