3D Perception in VR

A head-mounted display (HMD) uses dual displays to provide binocular disparity and low-latency motion tracking to simulate active motion parallax. By combining both types of visual information, HMD-based VR aims to give its users a sense of immersion and of being present in a virtual space.

Although both binocular disparity and motion parallax convey depth information in the physical environment, some intrinsic limitations of the HMD, such as the vergence-accommodation conflict and motion-to-photon latency, may affect depth perception in VR. Therefore, it is important to map the perceptual geometry of the virtual space conveyed through HMDs.

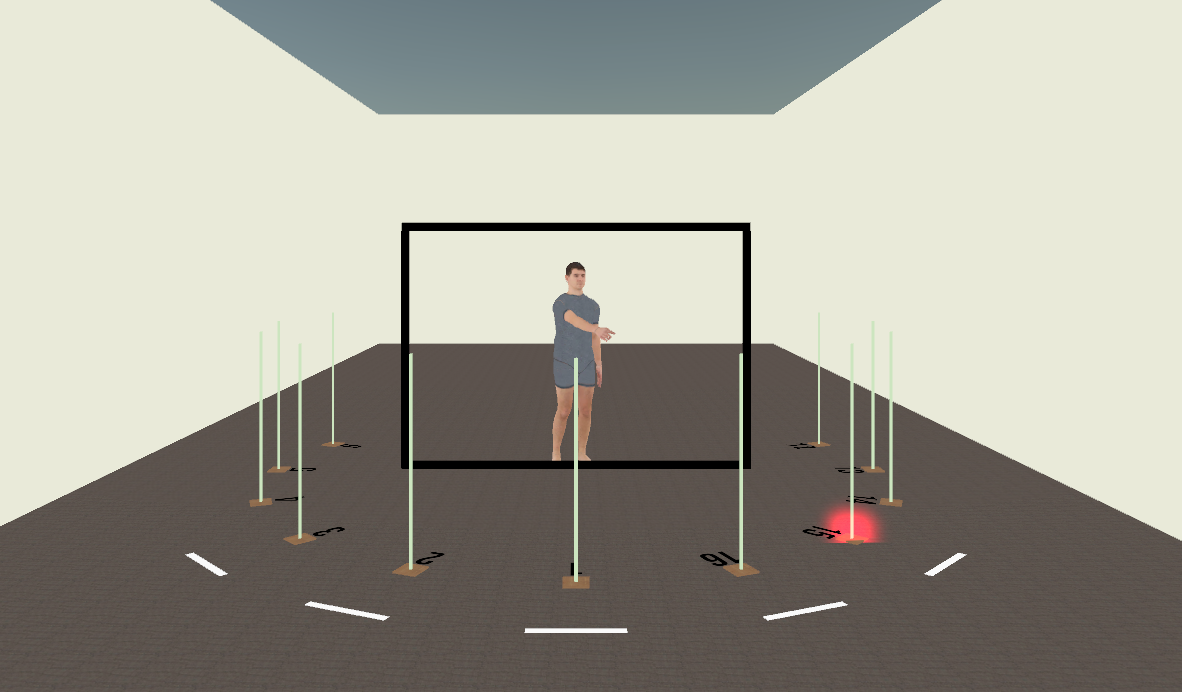

In this study, we used an exocentric pointing task to examine several aspects of this perceptual geometry. Specifically, participants rotated a male pointer so that he pointed at one of the target poles.

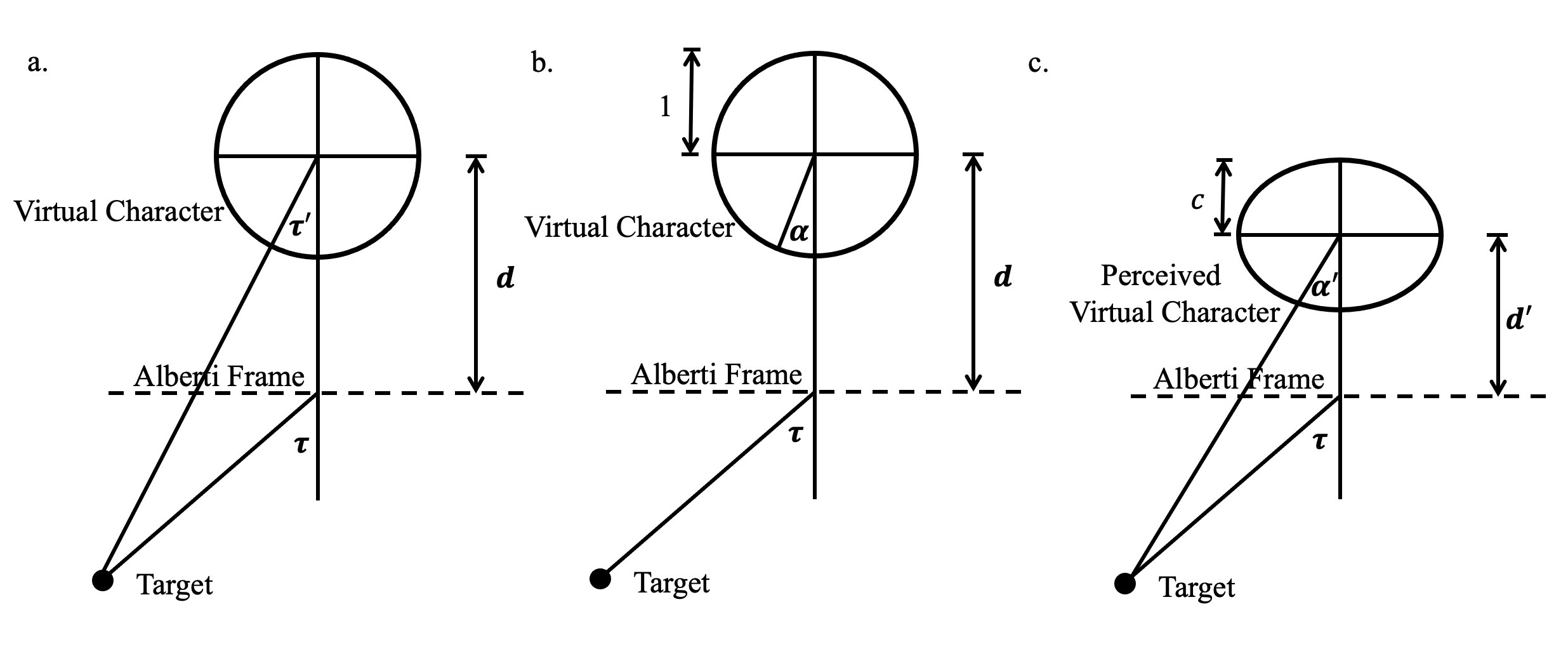

Accurate performance requires participants to correctly perceive the distance of the pointer relative to the target (distance perception), his depth dimension (3D shape perception), and his pointing direction (3D direction perception). Although we measured only the pointer's pointing angle, we developed a geometrical model that decomposes this angle into his perceived distance, 3D shape scaling, and pointing direction (see Wang & Troje (under review) for details).
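To give a feel for why a single pointing angle can carry information about 3D shape scaling, here is a minimal sketch. It is not the model from Wang & Troje (under review); it only assumes a generic affine depth scaling, in which the pointer's depth extent is multiplied by a hypothetical factor `depth_scale` while his lateral extent is unchanged. The function names and the convention of measuring angles from the frontoparallel plane are assumptions made for illustration.

```python
import math


def perceived_angle_deg(physical_deg: float, depth_scale: float) -> float:
    """Angle from the frontoparallel plane after depth is scaled by depth_scale.

    Assumption: lateral extent (cos) is unchanged, depth extent (sin) is
    multiplied by depth_scale (> 0).
    """
    t = math.radians(physical_deg)
    return math.degrees(math.atan2(depth_scale * math.sin(t), math.cos(t)))


def setting_for_target_deg(target_deg: float, depth_scale: float) -> float:
    """Physical angle an observer would set so the pointer looks aimed at target_deg.

    This is the inverse of perceived_angle_deg under the same assumption.
    """
    t = math.radians(target_deg)
    return math.degrees(math.atan2(math.sin(t) / depth_scale, math.cos(t)))


if __name__ == "__main__":
    # Settings for a target at 45 degrees under compression, veridical
    # perception, and expansion of the pointer's depth dimension.
    for k in (0.5, 1.0, 2.0):
        print(f"depth_scale={k}: setting={setting_for_target_deg(45.0, k):.1f} deg")
```

Under this assumption, an observer who perceives the pointer's depth as expanded would set a shallower physical angle than the target requires, so a systematic error in the measured angle can be read as a depth scaling factor. Separating that factor from errors in perceived distance and direction requires the full model described in the preprint.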

Furthermore, to examine the respective effects of binocular disparity and motion parallax, we used a virtual display, called the Alberti Frame, that can independently manipulate the availability of binocular disparity and motion parallax of the rendered 3D content on the display. (Due to the limitations of the screen on which you are reading this, I will only demonstrate the effect of motion parallax.)

This is when motion parallax specifies the rendered 3D content (note that the pointer's pointing direction remains static as the observer moves around the display):

This is when motion parallax does not specify the rendered 3D content (note that the pointer follows the observer as the observer moves around the display; this is also why Mona Lisa and Uncle Sam always follow the spectators):
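The two cases above can also be contrasted numerically. The sketch below is an idealization, not the rendering code of the Alberti Frame: it assumes a top-down view in which all angles are measured from the display's normal, and that without motion parallax the depicted scene rotates with the observer by the observer's full viewing angle. The function and parameter names are hypothetical.

```python
def apparent_direction_deg(pointer_deg: float, observer_deg: float,
                           motion_parallax: bool) -> float:
    """Pointing direction in room coordinates, relative to the display normal.

    pointer_deg:  direction the pointer is rendered with (0 = straight out
                  of the display).
    observer_deg: the observer's viewing angle relative to the display normal.
    """
    if motion_parallax:
        # Window-like case: the direction is anchored to the room.
        return pointer_deg
    # Picture-like case (assumed idealization): the scene turns with the observer.
    return pointer_deg + observer_deg


def offset_from_observer_deg(pointer_deg: float, observer_deg: float,
                             motion_parallax: bool) -> float:
    """How far the pointer appears to aim away from the observer."""
    return apparent_direction_deg(pointer_deg, observer_deg, motion_parallax) - observer_deg


if __name__ == "__main__":
    # A pointer rendered pointing straight out of the display, seen from
    # three viewing angles.
    for observer in (-30.0, 0.0, 30.0):
        with_mp = offset_from_observer_deg(0.0, observer, True)
        without_mp = offset_from_observer_deg(0.0, observer, False)
        print(f"observer={observer:+.0f}: with={with_mp:+.0f}, without={without_mp:+.0f}")
```

With motion parallax, the offset changes as the observer walks past the display; without it, the offset stays constant, which is the sense in which the pointer "follows" the observer.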

Based on the model optimization, we found that:

- Binocular disparity specifies distance, but it also introduces a relief depth expansion of the perceived pointer.

- Motion parallax specifies 3D direction, but if motion parallax and binocular disparity conflict, the specified direction is distorted.

Check out the preprint below for more details.

Related Publications

Wang, X.M., Thaler, A., Eftekharifar, S., Bebko, A.O., & Troje, N.F. (2020). Perceptual distortions between windows and screens: Stereopsis predicts motion parallax. In 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), 685-686. https://doi.org/10.1109/VRW50115.2020.00193.

Wang, X.M., & Troje, N. (under review). Relating pictorial and visual space: Binocular disparity for distance, motion parallax for direction. Visual Cognition. https://doi.org/10.31234/osf.io/zfjvm.

Wang, X.M., & Troje, N. (in preparation). A unified model for relating visual and pictorial space.